Attending the Apple Intelligence and App Intents workshop in Paris

This Wednesday, Apple organized a workshop in Paris entitled “Enhance your apps with Apple Intelligence and App Intents”. An appealing title that immediately triggered my interest and pushed me to register.

Was I selected? Of course I was. This article would not exist otherwise! About a hundred people and I were invited to Station F, for an all-day workshop led by an Apple technology evangelist.

It’s really thrilling to see Apple increasingly inclined to organize local events all around the world. And I couldn’t be more delighted.

A starting point

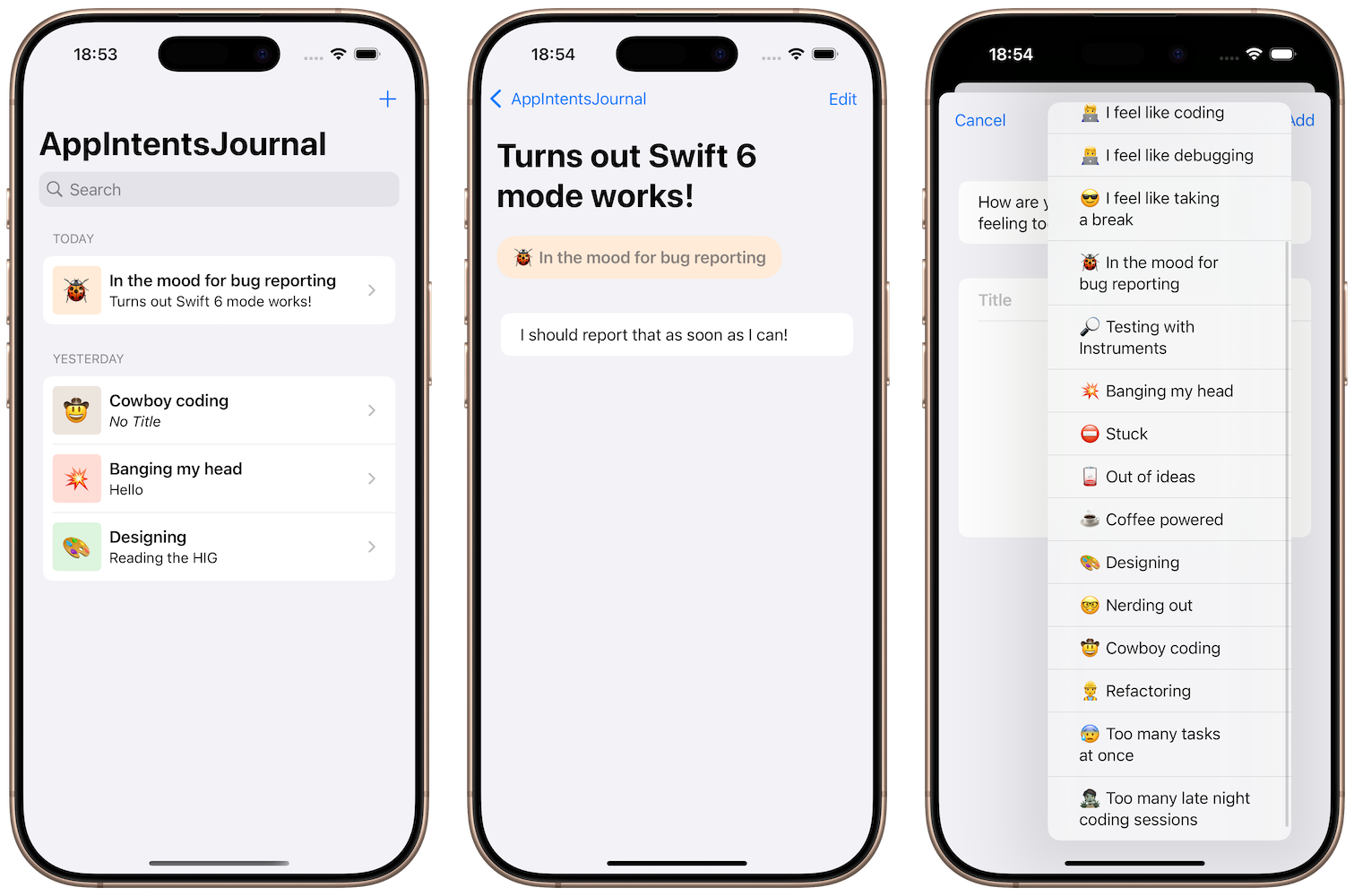

When the session started, we were provided with a sample app, “AppIntentsJournal”, a journaling app that records entries with:

- A coding state of mind

- A title

- A message

This app uses SwiftData and SwiftUI … and, interestingly, still uses the Swift 5 language mode.[1]

The AppIntentsJournal sample app provided by Apple

The goal of the workshop was to bring Apple Intelligence and system integrations to this app by using:

- App Intents, a way to provide the API of what your app can do to the system

- Core Spotlight, to provide the content of your app to the system

- Control Center, to get a quick entrypoint to create a new journal entry

- Writing Tools and Genmoji, a mere bonus at this point. The star of the show is App Intents.

We were promised that those steps would power the upcoming “context-aware” Siri that’ll use what our app can provide in many more new use cases than before. However, we were not shown a demo of that.

Also, due to Apple’s regional restrictions in the EU, actual testing of Writing Tools and Genmoji was done by our speaker on his non-EU device with Apple Intelligence enabled. Thankfully, Apple Intelligence is supposed to arrive, at least in France, sometime in April with iOS 18.4.[2]

The new “assistant” schemas

Introduced as macros last November, they give the system a better understanding of your app’s entities and features when they match certain “patterns”, a.k.a. schemas.

Many schemas are available today, including browsers, mail, photos and videos, but also journaling. All available schemas are documented in the App intent domains documentation.

If your entity does not match any predefined App intent domain schema, no panic! I got confirmation that Apple Intelligence also works with “standard” AppEntity implementations, as long as they are provided to the system.[3]

Our first task is to implement an @AssistantEntity matching the journal.entry schema.

Implementing a schema is as simple as:

- annotating a struct with the @AssistantEntity(schema: .journal.entry) macro

- providing the properties that are expected by the schema.

But even simpler, Xcode comes with predefined code snippets, allowing us to type journal_entry and get:

    @AssistantEntity(schema: .journal.entry)
    struct JournalEntryEntity {
        struct JournalEntryEntityQuery: EntityStringQuery {
            func entities(for identifiers: [JournalEntryEntity.ID]) async throws -> [JournalEntryEntity] { [] }
            func entities(matching string: String) async throws -> [JournalEntryEntity] { [] }
        }

        static var defaultQuery = JournalEntryEntityQuery()

        var displayRepresentation: DisplayRepresentation { "Unimplemented" }

        let id = UUID()

        var title: String?
        var message: AttributedString?
        var mediaItems: [IntentFile]
        var entryDate: Date?
        var location: CLPlacemark?
    }

Notice how none of those properties are marked with @Property, nor does the struct declare conformance to the AppEntity protocol. All of that is added for you by the magic of the macro.

Aside from matching the predefined schema (enforced by the macro at build time), creating an @AssistantEntity is no different from creating an AppEntity.

And we can therefore add convenience to our JournalEntryEntity and JournalEntry objects:

      struct JournalEntryEntity {
          ...
    -     let id = UUID()
    +     let id: UUID
          ...
          @Parameter(title: "State of Mind")
          var mood: DeveloperStateOfMind?

          init(item: JournalEntry) {
              id = item.journalID
              title = item.title
              message = item.message?.asAttributedString
              entryDate = item.entryDate
              mood = item.stateOfMind
          }
      }

      extension JournalEntry {
          var entity: JournalEntryEntity {
              JournalEntryEntity(item: self)
          }
      }

Next, App Entities are not the only ones with associated schemas. Intents have them as well!

Journaling provides (as of today):

- createAudioEntry

- createEntry

- deleteEntry

- search

- updateEntry

And they all come with their own code snippets as well.

Here, we first implemented the createEntry intent using the journal_createEntry snippet and got:

    @AssistantIntent(schema: .journal.createEntry)
    struct CreateJournalEntryIntent {
        var message: AttributedString
        var title: String?
        var entryDate: Date?
        var location: CLPlacemark?

        @Parameter(default: [])
        var mediaItems: [IntentFile]

        func perform() async throws -> some ReturnsValue<JournalEntryEntity> {
            .result(value: _)
        }
    }

with very little work left to make it functional.

Just like with the previous macro, conformance to the AppIntent protocol and the @Parameter annotations come for free thanks to the @AssistantIntent macro.

Otherwise, perform() is no different from that of a classic AppIntent, but the intent now follows a predefined schema that Siri and Apple Intelligence expect and understand.

This is also what powers the interoperability of concepts for many very different apps.

Dependency injection with AppDependencyManager

Next up is implementing the @AssistantIntent(schema: .journal.search) intent, which should open the app and search for the given term.

Yet again, code snippets come to the rescue: journal_search gives us the boilerplate:

    @AssistantIntent(schema: .journal.search)
    struct SearchJournalEntriesIntent: ShowInAppSearchResultsIntent {
        static var searchScopes: [StringSearchScope] = [.general]

        var criteria: StringSearchCriteria

        func perform() async throws -> some IntentResult {
            .result()
        }
    }

Since this intent is going to open the app at the right place for searching, we need to inject our navigation as a dependency. Turns out it’s pretty easy to do:

    struct SearchJournalEntriesIntent: ShowInAppSearchResultsIntent {
        ...
        @Dependency var navigationManager: NavigationManager
        ...
        func perform() async throws -> some IntentResult {
            await navigationManager.openSearch(with: criteria.term)
            return .result()
        }
    }

Note that @Dependency is an alias of @AppDependency.

Now we have to fulfil our part of the contract by providing the NavigationManager object early in the app lifecycle, often within the .init() of the App struct:

    @main
    struct AppIntentsJournalApp: App {
        let navigationManager: NavigationManager

        init() {
            let navigationManager = NavigationManager()
            AppDependencyManager.shared.add(dependency: navigationManager)
            self.navigationManager = navigationManager
        }

        var body: some Scene {
            ...
        }
    }

AppIntents and CoreSpotlight interoperability

This is new with iOS 18, and it is actually an impressive feature: you can describe the whole “search and open from Spotlight” flow using App Intents.

First, you’ll conform to the new IndexedEntity protocol. The technique here is to start from the provided defaultAttributeSet, which already contains valuable information, and enrich it with your own entity info:

    extension JournalEntryEntity: IndexedEntity {
        var attributeSet: CSSearchableItemAttributeSet {
            let attributes = defaultAttributeSet
            attributes.title = title
            if let message {
                attributes.contentDescription = String(message.characters[...])
            }
            return attributes
        }
    }

Then, you have to actually index the content.

A good place to do so is pretty much when you create the data itself:

    func newEntry(...) async throws -> JournalEntry {
        let modelContext = ...
        let entry = ...
        modelContext.insert(entry)
        try await CSSearchableIndex.default().indexAppEntities([entry.entity])
        return entry
    }

Note that you’re also responsible for updating and deleting those index entries as your entities change over their lifecycle in your app!
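As a rough sketch of that update/delete responsibility, here is a small generic helper. The `SpotlightSync` type and its method names are hypothetical and not part of the workshop code. It only relies on the Core Spotlight calls for indexed entities, and it assumes that re-indexing an entity with the same identifier replaces the existing Spotlight item.

```swift
import AppIntents
import CoreSpotlight

// Hypothetical helper (not from the workshop sample) that keeps Spotlight
// in sync with the lifecycle of any IndexedEntity.
enum SpotlightSync {
    // Call after creating or editing entities. Assumption: indexing an entity
    // whose identifier is already known replaces the previous Spotlight item.
    static func upsert<Entity: IndexedEntity>(_ entities: [Entity]) async throws {
        try await CSSearchableIndex.default().indexAppEntities(entities)
    }

    // Call after deleting entities in the app so stale results disappear.
    static func remove<Entity: IndexedEntity>(
        ids: [Entity.ID],
        ofType type: Entity.Type
    ) async throws {
        try await CSSearchableIndex.default().deleteAppEntities(
            identifiedBy: ids,
            ofType: type
        )
    }
}
```

With the entity from this article, a deletion could then be mirrored with something like `try await SpotlightSync.remove(ids: [entry.journalID], ofType: JournalEntryEntity.self)`.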

The final piece of the puzzle is to tell the system how to open the specific item the user might choose from a Spotlight search. And there is an intent for that: OpenIntent.

This one has existed since the introduction of AppIntents, and explicitly says how to open the app to a specific target, here our AppEntity:

    struct OpenEntryIntent: OpenIntent {
        static let title: LocalizedStringResource = "Open Journal Entry"
        static let description: IntentDescription = "Shows the details for a specific journal entry."
        static let openAppWhenRun: Bool = true

        @Dependency var navigationManager: NavigationManager

        @Parameter(title: "Journal Entry")
        var target: JournalEntryEntity

        @MainActor
        func perform() async throws -> some IntentResult {
            guard let entry = ... else {
                throw AppIntentError.Unrecoverable.entityNotFound
            }
            navigationManager.openJournalEntry(entry)
            return .result()
        }
    }

Weirdly, we have to set openAppWhenRun to true to actually open the app.

This was not required for the ShowInAppSearchResultsIntent that brought that for free.

We also have to specify the target that will be opened by the intent, here our JournalEntryEntity that will be provided as an input by the system.

And that’s all we need to get a full Spotlight experience for our app!

Searching, and opening an item with minimal effort!

Custom intents and Apple Intelligence

As I teased earlier (and more on that topic later), Apple Intelligence will be able to rely on things that are not matching any predefined assistant schema.

The technique shown during the workshop is to implement an AppShortcutsProvider. It also supplies Siri phrases, which give context about the kind of action associated with each intent.

It is therefore recommended not to neglect providing many intents for your app, and enriching them with Siri phrases to get them wired into the upcoming upgraded Siri!

It’ll also show them as quick actions in both Spotlight and Shortcuts apps:

Your app’s quick actions will also power Apple Intelligence
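To make this more concrete, here is a minimal sketch of an AppShortcutsProvider exposing one custom intent. The `ShowTodayEntriesIntent` intent, the phrases, and the titles are all made up for illustration and were not part of the workshop. The one firm rule is that each phrase has to contain the `\(.applicationName)` token.

```swift
import AppIntents

// Hypothetical custom intent that does not match any assistant schema.
struct ShowTodayEntriesIntent: AppIntent {
    static let title: LocalizedStringResource = "Show Today's Entries"
    static let openAppWhenRun: Bool = true

    func perform() async throws -> some IntentResult {
        // Navigation to the relevant screen would happen here.
        return .result()
    }
}

// Declares the app's shortcuts, along with the Siri phrases that trigger them.
struct JournalShortcuts: AppShortcutsProvider {
    static var appShortcuts: [AppShortcut] {
        AppShortcut(
            intent: ShowTodayEntriesIntent(),
            phrases: [
                // Every phrase must include the application name token.
                "Show today's entries in \(.applicationName)",
                "Open today's \(.applicationName) entries"
            ],
            shortTitle: "Today's Entries",
            systemImageName: "calendar"
        )
    }
}
```

An app declares a single AppShortcutsProvider, so additional shortcuts would be listed as further AppShortcut values in the same `appShortcuts` builder.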

Control widget

The Control part was a pretext to show how to build controls with WidgetKit. But since the control action is powered by the AppIntent framework, it also shows how to deal with multiple targets for intents.

In this example, we added every file required for compilation to the widget target, increasing both the executable size and the build time.

But the speaker mentioned two techniques to fully decouple the control’s intent from the underlying code:

- Achieving much better code separation with packages, providing the necessary

- Using universal links to decouple things fully.[4]

It was also explained why the OpensIntent result exists:

It decouples the control’s action, which executes in the widget extension, from the underlying intent that actually performs the work, which is run by the fully launched app.

    struct ComposeControlAction: AppIntent {
        static let title: LocalizedStringResource = "Compose Journal Entry"
        static var isDiscoverable = false

        // This runs on the extension
        func perform() async throws -> some IntentResult & OpensIntent {
            return .result(opensIntent: ComposeNewJournalEntry())
        }
    }

    struct ComposeNewJournalEntry: AppIntent {
        ...
        // Because openAppWhenRun is true …
        static let openAppWhenRun: Bool = true
        ...
        // … this is executed by the App
        func perform() async throws -> some IntentResult {
            ...
        }
    }

In the end, we successfully got the control working:

We're now a tap away from logging new exciting entries!
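For reference, the control itself can be declared along these lines. This is a sketch rather than the workshop’s exact code: the `ComposeControl` name, the `kind` string, the label, and the symbol are placeholders, and `ComposeControlAction` is the intent shown above.

```swift
import AppIntents
import SwiftUI
import WidgetKit

// Sketch of a Control Center button, declared in the widget extension.
// Assumes ComposeControlAction (shown above) is compiled into this target.
struct ComposeControl: ControlWidget {
    var body: some ControlWidgetConfiguration {
        // The kind string is a placeholder; it only needs to be unique and stable.
        StaticControlConfiguration(kind: "com.example.AppIntentsJournal.compose") {
            // Tapping the control runs the intent's perform() in the extension,
            // which then hands over to the intent that opens the app.
            ControlWidgetButton(action: ComposeControlAction()) {
                Label("New Entry", systemImage: "square.and.pencil")
            }
        }
        .displayName("New Journal Entry")
    }
}
```

The control then needs to be listed in the extension’s WidgetBundle so that it shows up in the Control Center gallery.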

Writing tools & Genmoji

The final part of this workshop focused on two concrete Apple Intelligence features, and their implications for your app’s behavior.

Like I announced in the summary, they were a small bonus.

For Writing Tools, we implemented a text view delegate method to keep the rewrite from altering any monospaced code contained in an entry.

If you rewrite your journal entry, it’s better to keep the code as it is:

    func textView(_ textView: UITextView, writingToolsIgnoredRangesInEnclosingRange enclosingRange: NSRange) -> [NSValue] {
        let excluded: [NSRange] = ...
        return excluded as [NSValue]
    }

The NSValue return type is proof that the people who built Writing Tools are more than 40 years old. That, or TextKit 😂
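How the excluded ranges get computed was not detailed here. One possible approach, given purely as an illustration, is to walk the font attributes of the text and keep the runs that use a monospaced font. The `monospacedRanges(in:within:)` helper below is hypothetical, and it assumes that code in an entry is styled with a font carrying the monospace trait.

```swift
import UIKit

// Hypothetical helper: collects the ranges inside `enclosingRange` whose font
// has the monospace trait, boxed as NSValue as the delegate method expects.
func monospacedRanges(in text: NSAttributedString, within enclosingRange: NSRange) -> [NSValue] {
    var ranges: [NSValue] = []
    text.enumerateAttribute(.font, in: enclosingRange, options: []) { value, range, _ in
        // Skip runs without a font, or with a proportional font.
        guard let font = value as? UIFont,
              font.fontDescriptor.symbolicTraits.contains(.traitMonoSpace) else { return }
        ranges.append(NSValue(range: range))
    }
    return ranges
}
```

Inside the delegate method, this could be used as `return monospacedRanges(in: textView.attributedText, within: enclosingRange)`.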

For Genmoji, it was a technical reminder that they are supported in rich text, and that keeping and saving them requires specific data formatting. The code change was just about switching from the RTF format (which does not support Genmoji and would strip them away) to the RTFD format.

You can read more about the technical part of Genmoji by looking at the underlying NSAdaptiveImageGlyph documentation.

      let data = try string.data(
          from: NSRange(location: 0, length: string.length),
    -     documentAttributes: [.documentType: NSAttributedString.DocumentType.rtf]
    +     documentAttributes: [.documentType: NSAttributedString.DocumentType.rtfd]
      )

Wrap-up

I’m always keen on participating in technical events, whether they’re workshops or meetings organized by Apple, or conferences.

Indeed, at those events, you get to meet incredible folks sharing the same concerns and passion about code. And you also gather new ideas and concepts to make your apps and upcoming work better.

Although I had already played, a lot, with the App Intents framework, this event gave me new energy and ideas to improve Padlok and SharePal, and to work harder on my new ongoing project.

It’s also hard to overstate how crucial it is to provide an API of your app to the system using App Intents. This is, for you, an incredible opportunity to provide entry points to your app all across the system (and, at the same time, more sales opportunities).

And while it was often seen as “a feature only for Shortcuts power users”, with Apple Intelligence and Siri taking over those APIs, that is no longer true.

Long story short: if you don’t dive fully into App Intents, you’re missing out!

[1] Asking about this, I was told that the AppIntents framework is not yet entirely Swift 6 ready. Nevertheless, I was able to enable it and get things working correctly on my side, even though the initial sample code had a couple of issues that had to be dealt with before it would build.

[2] As it was announced at the very end of that press release 🇫🇷

[3] More on that in an upcoming blog post.

[4] At the cost of a domain name configured for universal links.
